Application performance is the basis of a good user experience. This makes monitoring performance an important challenge if you’re responsible for managing applications. But finding the right tooling to do this can be quite a journey...

When managing a complex IT landscape, you are always trying to combine agility and stability. To control risk while innovating, you need solutions and tools that can grow with your needs while not requiring constant maintenance. This also applies to your analytics solutions.

The obvious solution...

Any cloud stack now offers a proprietary analytics solution. So, if your primary cloud provider is Microsoft or Amazon, it seems like a logical step to invest in the analytics solution that comes with their stack. But while these providers all present themselves as one-stop shops that cater to your every need, their tooling may not fit your needs. Non-functional needs, that is. Functionally, the differences between the leading performance analytics tools are slight. When you are selecting a tool, most of them will probably fulfill your requirements. It is much more important to consider who will be using the tool and whether it fits your specific way of working. It’s the non-functional requirements that are key here.

Other requirements

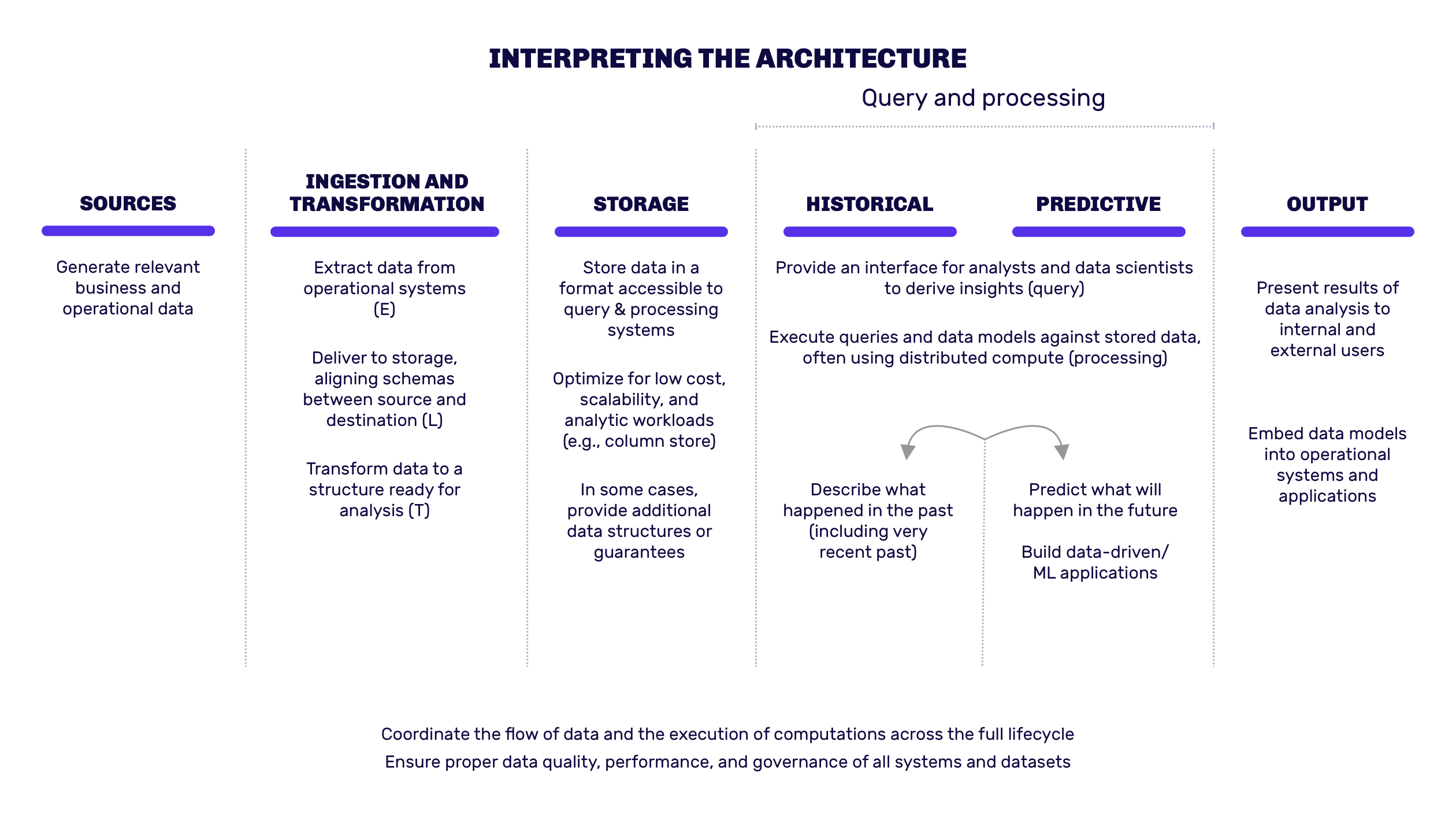

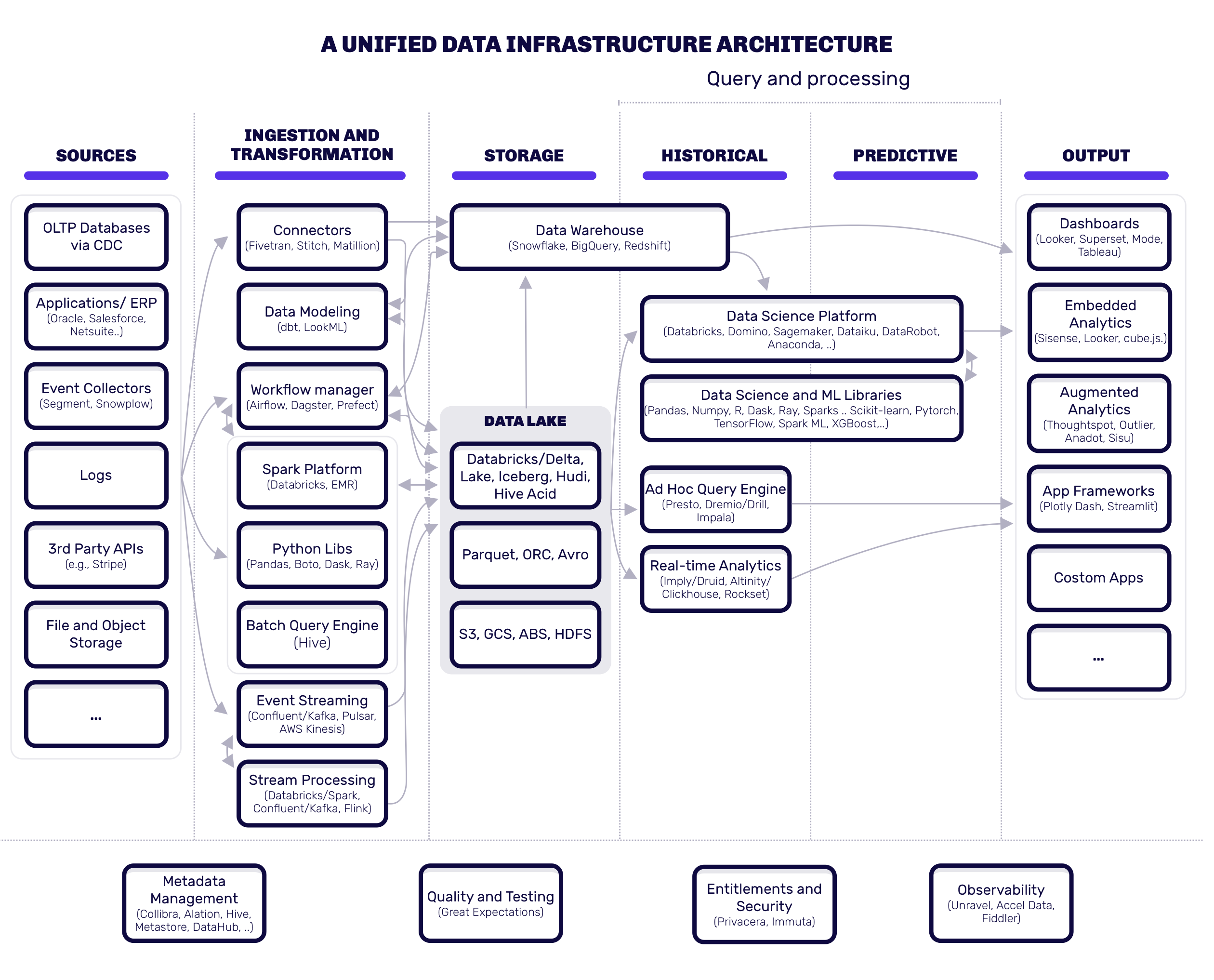

Another factor to consider is that performance analytics may not be the only use you have for a business intelligence or analytics tool. Marketing and Sales departments focusing on growth and conversions will have their own requirements from a solution, and you may want to serve all these use cases with a single tool. Also, consider that data infrastructure serves two purposes. The first, of course, is to help business leaders make better decisions through the use of data (analytic use cases). But when building your infrastructure, don’t forget the second purpose: to incorporate data intelligence into customer-facing applications, including via machine learning (operational use cases).

Implementation speed is key

Time to go live and speed of implementation are essential to the success of an analytics tool. For an organization to be agile and respond to change, analytics tooling must also be adaptive. As much of the software world moves toward no-code and low-code, the way we implement, adopt and use software tools is also changing in a fundamental way. These days, a tool is a platform that enables users to implement the functionality they need. This can be a real game changer for your performance analytics and business intelligence, as it enables users to build their own dashboards, reports and visualizations.

Who will be using your analytics tool?

But this may not be what you want at all. The amount of configurability you want depends entirely on who will be using the solution. Are you indeed aiming to let business users build their own user-friendly dashboards? Or do you have technical users who want as much of the raw data as possible so they can do their own data analysis? What sort of support can you give your users? Do you have developers available to build them new dashboards when they need them? These are important questions to answer before you commit to a solution.

What will they use it for?

‘Analytics’ is a pretty broad term, covering everything from real-time, AI-powered decision making to ad-hoc, question-based analysis of historical data. Which sort of analytics do you want to support and how will this add value in your specific situation? Are you ready to start getting value from machine learning and from predictive and prescriptive analytics? Do you need prescriptive analytics that not only present data, but also tell the user what action to take? Do you want to analyze real-time or just historical data?

Operational data snapshots, embedded analytics and complex curated data analyses are fundamentally different processes that require different tooling and probably also imply different models for data governance and software maintenance. Some use cases may require a platform that can be programmed with Python. For building complex BI and process optimization queries, SQL support may be more important.

Determining what the purpose and scope of data visualizations is going to be can also help you determine which solutions will work best. To have non-technical users self-serve their dashboarding and reporting needs, you need to be looking at no-code or low-code platforms.

What does your IT landscape look like?

Any tool or suite of tools you select will have to integrate with your databases, APIs and other data sources such as webhooks, web analytics or IoT devices. Also, most companies we work with do not limit themselves to using a single cloud solution. This creates a need for flexible analytics tools that can cover the entire landscape and not just one cloud. Consider, for example, the Israel-based Sisense, which specializes in solutions for embedded analytics, providing functionality that lets users easily add data widgets to their existing applications. This makes it much easier to connect data, set up analyses and integrate with every part of the IT landscape, and it allows users to build their own custom analytics environment.

Do you have specific security and privacy demands?

And then, there is the question of data security and privacy. It may not fit company policy or local law to use an analytics solution that stores data in the United States or in other countries outside the European Union. To get maximum control over your data, consider tools that only license the functionality of the software and can be installed on your own systems. These tools can usually be integrated into your own ecosystem more easily, and being able to completely control data storage provides an extra level of confidence that your data are secure.

That said, even determining what the applicable laws are can be a challenge. With cloud computing, it is not always clear where data are stored. Within the EU, the physical location is a decisive factor in determining which privacy rules apply. In other jurisdictions, other regulations may apply. This challenge becomes more difficult because of the volatility of data in the cloud. Data may be transferred from one location to another regularly or may reside in multiple locations at a time. This makes it hard to determine applicable law and monitor data flows.

At the same time, enterprises that use cloud service providers expect the privacy commitments they have made to their own customers and employees to be honored by the cloud service provider. It is advisable to negotiate a tailored contract for this with your cloud providers, in addition to controller and processor agreements. Having real-time knowledge of where your data are stored and how they can be transferred and accessed is important to determine applicable law. You also want to audit your cloud provider to see if data security is sufficient.
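To make the idea of knowing where your data are stored a bit more concrete, here is a minimal sketch in Python. It assumes you maintain an inventory of data stores with the country in which each one physically resides, and that your policy can be expressed as a set of allowed countries. The names `DataStore` and `find_violations`, the example inventory and the allowed set are all hypothetical and only serve as illustration; a real check would pull this information from your cloud providers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataStore:
    """Hypothetical inventory entry for one place where data are kept."""
    name: str
    country: str  # ISO 3166-1 alpha-2 code of the physical storage location


def find_violations(stores, allowed_countries):
    """Return the stores located outside the allowed countries."""
    allowed = {code.upper() for code in allowed_countries}
    return [store for store in stores if store.country.upper() not in allowed]


# Example inventory and policy (assumptions, not legal advice).
inventory = [
    DataStore("metrics-db", "NL"),
    DataStore("log-archive", "US"),
    DataStore("dashboard-cache", "de"),
]
violations = find_violations(inventory, {"NL", "DE"})

for store in violations:
    print(f"{store.name} is stored in {store.country}, outside the allowed countries")
```

Running a check like this on a schedule will not settle which law applies, but it can flag data stores that deserve a closer look.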

Don’t forget data validation!

Validating data is an aspect of analytics that is sometimes forgotten. But data quality is extremely important for effective analytics. Any dashboard, report or analysis can be rendered worthless if it is based on incorrect data. Errors or omissions can be introduced into data at any stage of data processing. An error may occur in your data transformation layer, in your data warehouse or in your integration solution. Sometimes, data coming from applications or hardware is simply wrong or not transmitted at all, due to misconfiguration. Data validation is usually not a question of purchasing and deploying a tool. It requires a deep understanding of the data pipeline and the organization. Setting up a data validation and governance process should be an integral part of the adoption of performance analytics tooling. Realizing this, Microsoft has rolled out Azure Purview (since rebranded as Microsoft Purview), a data management solution that allows you to gather data from all cloud and on-premises environments, while keeping track of its lineage and automatically categorizing metadata, which helps to protect data integrity.
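As an illustration of what a validation step in a data pipeline could look like, here is a minimal sketch in Python. It assumes performance measurements arrive as records with an endpoint, a response time in milliseconds and an ISO 8601 timestamp. The `Measurement` shape, the rules and the function names are hypothetical; your own pipeline will need checks that reflect your data and your organization.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Measurement:
    """Hypothetical shape of one performance measurement."""
    endpoint: str
    response_ms: Optional[float]
    timestamp: str  # expected in ISO 8601, e.g. 2024-01-15T10:30:00+00:00


def validate(record: Measurement) -> List[str]:
    """Return a list of problems found in the record (empty if it is valid)."""
    problems = []
    if not record.endpoint:
        problems.append("missing endpoint")
    if record.response_ms is None:
        problems.append("missing response time")
    elif record.response_ms < 0:
        problems.append("negative response time")
    try:
        datetime.fromisoformat(record.timestamp)
    except ValueError:
        problems.append("invalid timestamp")
    return problems


def partition(
    records: List[Measurement],
) -> Tuple[List[Measurement], List[Tuple[Measurement, List[str]]]]:
    """Split records into valid ones and rejected ones with their problems."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with the reasons for rejection, instead of silently dropping them, makes it easier to trace an error back to the stage of the pipeline where it was introduced.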

Partnering up for guidance and knowledge

Which brings us to another important question that can be overlooked while trying to find your way in the jungle that is analytics tooling: do you want just the tool, or do you want advice and guidance on how to deploy, adopt and use it? Working with a knowledgeable partner can really help you get the most from your software investment and will significantly reduce the time-to-value of your shiny new analytics tool. We assist many organizations in getting their performance analytics on track and we usually find that our specific experience and knowledge help to get them up to speed quickly and get more value from their data.

Custom-built data apps

Speaking of what we do... Here at Triple, we are not shy to say that we are pretty good at developing apps, websites and cross-platform environments that provide personalized experiences, tailored to individual users. For you, this means you don’t have to settle for analytics tooling that is available off the shelf. If you have specific needs, it may be easier to custom-build something that serves all your user groups with exactly the functionality they need. This might sound like a big job, but it doesn’t have to be. On the contrary. Most analytics tools have so many features these days that much of your implementation effort will be spent on deciding what not to use and how to get rid of it. Working with a complicated tool has your users and developers spending their cognitive powers on things they don’t need or want. A custom-built solution only has what you need. And it can also have functions that are not available anywhere else. Cost-wise, the initial investment may be larger, but there are no license fees and you can be confident that the right tooling is delivered to the right user quickly, seamlessly integrating with your IT landscape, work processes, user management, look & feel and branding.

And if you ever want to talk about performance analytics, we’re always here for you.